Tutorial: Deploy Tempo HA on top of COS Lite

The Tempo HA solution consists of two Juju charms, tempo-coordinator-k8s and tempo-worker-k8s, that together deploy and operate Tempo, a distributed tracing backend by Grafana. The solution can operate independently, but we recommend running it alongside COS Lite.

You can also deploy the Canonical Observability Stack Terraform module, which already includes Tempo HA alongside the other observability charms. See more: Getting started with COS on Canonical K8s

Prerequisites

This tutorial assumes you already have a Juju model with COS Lite deployed on a Kubernetes cloud. To deploy one, follow the COS Lite tutorial first. If you only want to try deploying Tempo, you can skip this step, but you will then have to access your Tempo instance using juju ssh. If you are not sure how to do that, you will likely benefit from going through the COS Lite tutorial first.

The Tempo charm uses S3-compatible object storage as its storage backend. In most production deployments, this means either deploying Ceph or connecting to an S3 backend via the s3-integrator charm. For testing and local development, you can use the minio charm.

To deploy and configure Minio to provide an S3 bucket to Tempo, follow this guide.

In the following steps we will assume that you have, in a model called cos:

- a COS Lite deployment

- an application called s3 providing an s3 endpoint.

Deployment

You can use Tempo’s Terraform module to deploy Tempo HA in distributed mode.

In your Terraform repository, create a tempo module:

module "tempo" {
  source        = "git::https://github.com/canonical/tempo-operators//terraform"
  model         = var.model
  channel       = var.channel
  s3_endpoint   = var.s3_endpoint
  s3_access_key = var.s3_access_key
  s3_secret_key = var.s3_secret_key
}

Add relevant input variables to your variables.tf, then run terraform apply.
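One way to supply the module inputs without writing credentials into the repository is through environment variables: Terraform reads any environment variable named TF_VAR_<name> as the value of the input variable <name>. The sketch below assumes that your variables.tf declares model, channel, s3_endpoint, s3_access_key and s3_secret_key, matching the module block above. Every value shown is a placeholder chosen for illustration, not a default of the module.

```shell
# Placeholder values: replace each one with the value for your own deployment.
export TF_VAR_model="cos"                                    # Juju model assumed in this tutorial
export TF_VAR_channel="1/stable"                             # assumed charm channel
export TF_VAR_s3_endpoint="http://s3.example.internal:9000"  # hypothetical endpoint URL
export TF_VAR_s3_access_key="changeme-access-key"            # hypothetical credential
export TF_VAR_s3_secret_key="changeme-secret-key"            # hypothetical credential

# Then, from the directory that contains the module block:
#   terraform init
#   terraform apply
```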

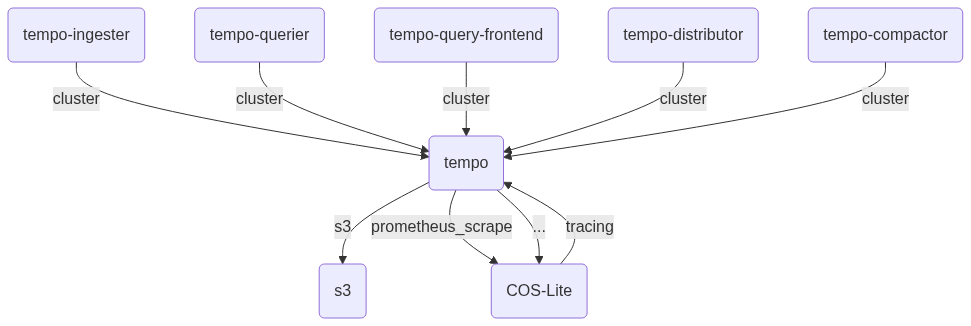

This will deploy a Tempo cluster with all required and optional roles: ingester, querier, query-frontend, distributor, compactor, metrics-generator, assigning each worker a dedicated role.

See more: Available inputs to configure Tempo’s Terraform module.

See more: Recommended scale for each role.

Integrating with COS

After deploying, integrate Tempo with the rest of the Canonical Observability Stack using the commands below. The same relations can also be defined in Terraform code.

juju integrate tempo:ingress traefik:traefik-route

# if you want to set up tracing for COS Lite charms and the workloads that support it:

juju integrate tempo traefik:charm-tracing

juju integrate tempo traefik:workload-tracing

juju integrate tempo loki:charm-tracing

juju integrate tempo loki:workload-tracing

juju integrate tempo grafana:charm-tracing

juju integrate tempo grafana:workload-tracing

juju integrate tempo prometheus:charm-tracing

juju integrate tempo prometheus:workload-tracing

# grafana datasource integration

juju integrate tempo:grafana-source grafana:grafana-source

# self-monitoring integrations

juju integrate tempo:grafana-dashboard grafana:grafana-dashboard

juju integrate tempo:metrics-endpoint prometheus:metrics-endpoint

juju integrate tempo:logging loki:logging
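The eight tracing integrations above follow a single pattern: each COS Lite application is related to tempo over both charm-tracing and workload-tracing. As a sketch, a small shell function can print those commands so that they can be reviewed before anything is run. The function name print_tracing_integrations is made up for this example; the application and endpoint names are the ones used in the commands above.

```shell
# Print one "juju integrate" command per (application, tracing endpoint) pair.
print_tracing_integrations() {
    for app in traefik loki grafana prometheus; do
        for endpoint in charm-tracing workload-tracing; do
            echo "juju integrate tempo ${app}:${endpoint}"
        done
    done
}

# Review the generated commands first; nothing is executed here.
print_tracing_integrations
```

Once the output looks right, piping it to a shell (print_tracing_integrations | sh) runs the commands against the currently selected model.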

Monolithic deployment mode for testing and dev

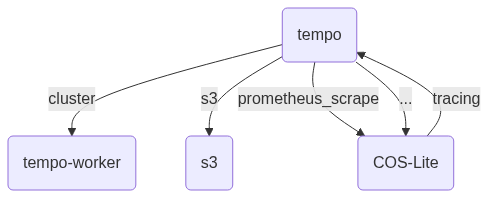

To deploy Tempo HA in monolithic mode, where a single worker node runs all components of distributed Tempo, you need to deploy a single instance of the tempo-coordinator-k8s charm as tempo and a single instance of the tempo-worker-k8s charm as tempo-worker.

Integrate them over cluster, then integrate the tempo application with s3. At that point, tempo should reach active status.

juju deploy tempo-coordinator-k8s --channel 1/stable --trust tempo

juju deploy tempo-worker-k8s --channel 1/stable --trust tempo-worker

juju integrate tempo tempo-worker

juju integrate tempo s3

juju integrate tempo:ingress traefik:traefik-route

# if you want to set up tracing for COS Lite charms and the workloads that support it:

juju integrate tempo traefik:charm-tracing

juju integrate tempo traefik:workload-tracing

juju integrate tempo loki:charm-tracing

juju integrate tempo loki:workload-tracing

juju integrate tempo grafana:charm-tracing

juju integrate tempo grafana:workload-tracing

juju integrate tempo prometheus:charm-tracing

juju integrate tempo prometheus:workload-tracing

# grafana datasource integration

juju integrate tempo:grafana-source grafana:grafana-source

# self-monitoring integrations

juju integrate tempo:grafana-dashboard grafana:grafana-dashboard

juju integrate tempo:metrics-endpoint prometheus:metrics-endpoint

juju integrate tempo:logging loki:logging
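To confirm that the deployment has settled, you can wait for both applications to report active status. The following is a minimal sketch that assumes a Juju 3.x client, where the wait-for command is available; the helper name wait_until_active and the ten-minute timeout are choices made for this example.

```shell
# Block until the given application reports "active", or give up after the timeout.
wait_until_active() {
    app="$1"
    juju wait-for application "$app" --query='status=="active"' --timeout 10m
}

if command -v juju >/dev/null 2>&1; then
    # Wait for the worker first, then the coordinator, then print a summary.
    wait_until_active tempo-worker && wait_until_active tempo
    juju status tempo tempo-worker
else
    echo "juju CLI not found; run this on a machine with access to the cos model" >&2
fi
```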

Add dedicated worker roles

You can turn the monolithic deployment you have now into a distributed one by assigning some or all Tempo roles to individual worker applications. See this doc for an explanation of the architecture, and this document for a reference of the Tempo roles.

For Tempo to work, each one of the required roles must be assigned to a worker. This requirement is trivially satisfied if a worker has the role all.

So, without removing the tempo-worker application, we are going to start by adding an ingester worker node. Deploy another instance of the tempo-worker-k8s charm as tempo-ingester and add it to the same tempo coordinator instance to have it join the cluster. Configure the ingester application, as you deploy it or afterwards, to only have the ingester role enabled.

juju deploy tempo-worker-k8s --channel 1/stable --trust tempo-ingester --config role-all=false --config role-ingester=true

juju integrate tempo tempo-ingester

Wait for the new worker to reach active status. You now have two worker applications running the ingester component: tempo-ingester, which runs only the ingester, and tempo-worker, which also runs all other components.

Repeat the above step for each of the other required roles: querier, query-frontend, distributor, and compactor. When you’re done, remove the tempo-worker application.

Each of the required roles initially taken by the tempo-worker application has now been transferred to the new worker instances.
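The repetition described above can be sketched as a loop. Several things here are assumptions rather than facts from this tutorial: the config keys for the other roles are taken to follow the same role-<name> pattern as role-ingester, the channel is taken to match the one used for the coordinator, and the helper names run and deploy_role_worker are made up for this example. By default the script only prints the commands it would run.

```shell
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to execute them.
DRY_RUN="${DRY_RUN:-1}"
CHANNEL="${CHANNEL:-1/stable}"   # assumed: same channel as the coordinator

# Print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Deploy a worker dedicated to one role and join it to the tempo coordinator.
deploy_role_worker() {
    role="$1"
    # Assumption: each role is toggled by a role-<name> config key, like role-ingester.
    run juju deploy tempo-worker-k8s --channel "$CHANNEL" --trust "tempo-${role}" \
        --config role-all=false --config "role-${role}=true"
    run juju integrate tempo "tempo-${role}"
}

for role in querier query-frontend distributor compactor; do
    deploy_role_worker "$role"
done

# Only after every new worker has reached active status:
#   juju remove-application tempo-worker
```

The removal of tempo-worker is left as a comment on purpose, so that the all-roles worker is not retired before the dedicated workers are ready.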

Further reading
